After a night of work on a flight, I managed to use the conventional neuroevolution (CNE) trainer module I created to train a neural network, in a Box2D-based double-pendulum cart environment, to balance a double inverted pendulum on its own. This is very difficult to accomplish with ordinary PID controllers. I think that if I only wanted a deterministic controller for this system, I could have used a more complicated LQR controller, which would have handled the complexity of the system. What I really wanted, though, was not to balance the pendulum as such, but to train a network to learn about an environment and adapt within it to achieve a task, so that these techniques can later be applied to other, more challenging tasks.

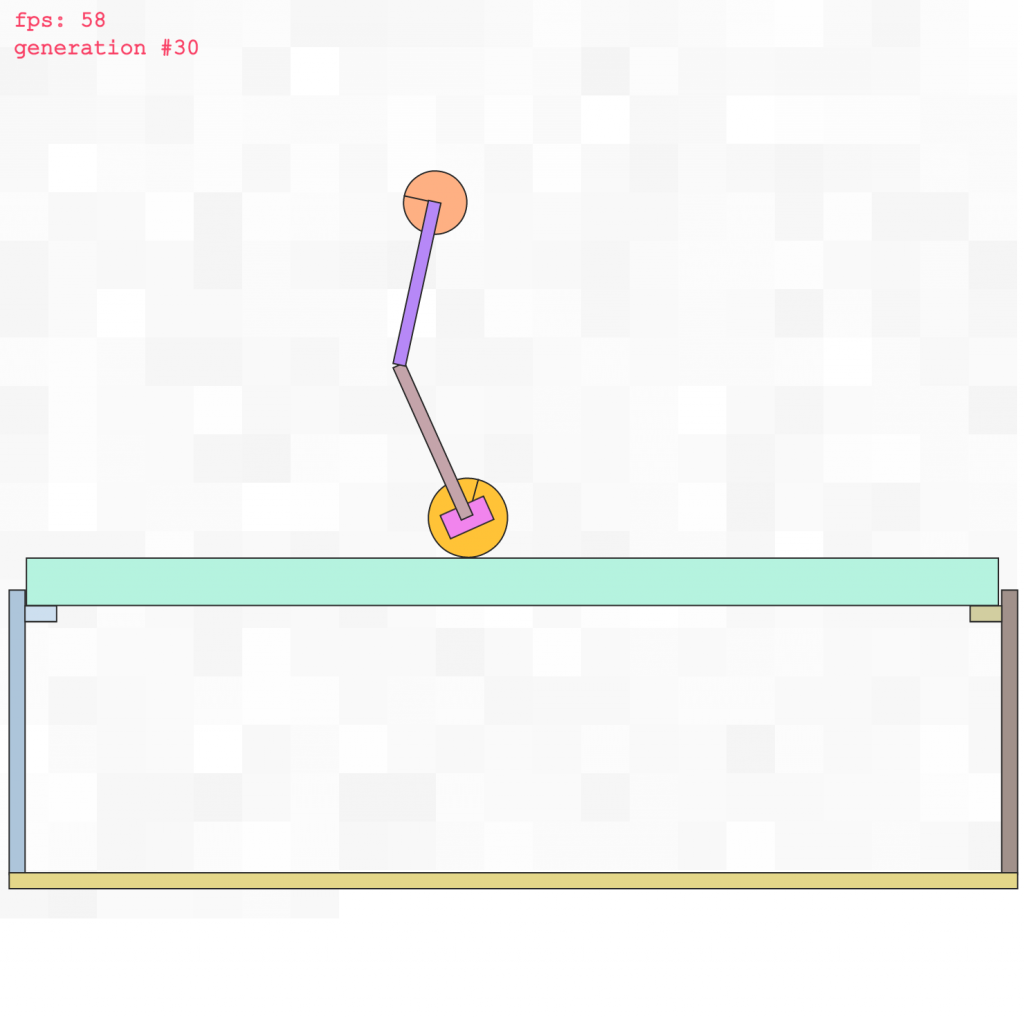

Neuroevolution-based algorithms seem to be a good starting point for these task-based simulations. After roughly 100 generations of training, the network began to rebalance the pendulum, even though the system is highly unstable, non-linear, and has multiple joints. Below is a demo of the trainer used to train the network to self balance; progress becomes visible after about 100 generations.
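For readers unfamiliar with CNE, the core loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the trainer module used here: each genome is a flat list of network weights, `toy_fitness` is a hypothetical stand-in for a simulation rollout (in the real setup, fitness would come from running the Box2D cart and scoring how well the pendulum stays up), and the elitism, uniform crossover, and Gaussian mutation settings are illustrative choices.

```python
import random

def toy_fitness(genome):
    # Hypothetical stand-in for a simulation rollout: higher is better,
    # maximal when the weights match an arbitrary target vector.
    target = [0.5, -1.0, 2.0, 0.0]
    return -sum((w - t) ** 2 for w, t in zip(genome, target))

def crossover(a, b, rng):
    # Uniform crossover: each weight is copied from one parent at random.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]

def mutate(genome, rng, rate=0.1, sigma=0.5):
    # Add Gaussian noise to each weight with probability `rate`.
    return [w + rng.gauss(0.0, sigma) if rng.random() < rate else w for w in genome]

def evolve(fitness, n_weights, pop_size=50, n_elite=10, generations=100, seed=0):
    rng = random.Random(seed)
    pop = [[rng.gauss(0.0, 1.0) for _ in range(n_weights)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        history.append(fitness(ranked[0]))  # best fitness in this generation
        elite = ranked[:n_elite]  # survivors are copied unchanged
        children = [mutate(crossover(rng.choice(elite), rng.choice(elite), rng), rng)
                    for _ in range(pop_size - n_elite)]
        pop = elite + children
    return max(pop, key=fitness), history

if __name__ == "__main__":
    best, history = evolve(toy_fitness, n_weights=4)
    print(history[0], history[-1], best)
```

Because the elite is copied unchanged into each new generation, the best fitness recorded in `history` can never decrease from one generation to the next.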

The final trained network can be viewed in this demo, or in the Instagram video below:

While the approach worked, and the double inverted pendulum does rebalance itself for a while, I'm not entirely satisfied with the result: under some starting conditions the network fails. I also had to hack it together from two pre-trained networks, one to swing the pendulum upwards and the other to hold it upright. I would prefer a training approach that produces a single network able to do both tasks, and do them well. In addition, the pendulum eventually loses balance and falls to one side. I need to think about next steps to raise the level of sophistication in its thinking.
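The two-network hack can be pictured as a switching controller. The sketch below is hypothetical: the post does not say how control is handed from one network to the other, so the rule used here (switch to the balancing network once both link angles are within `threshold` radians of upright), the `state` keys `theta1`/`theta2` (both assumed to be measured from upright), and the stand-in networks are all assumptions.

```python
import math

def wrap_angle(theta):
    """Map an angle in radians to [-pi, pi), with 0 meaning upright."""
    return (theta + math.pi) % (2 * math.pi) - math.pi

def make_switching_controller(swing_up_net, balance_net, threshold=0.3):
    # Hypothetical rule: hand control to the balancing network only when
    # both links are within `threshold` radians of upright.
    def controller(state):
        theta1 = wrap_angle(state["theta1"])
        theta2 = wrap_angle(state["theta2"])
        near_upright = abs(theta1) < threshold and abs(theta2) < threshold
        net = balance_net if near_upright else swing_up_net
        return net(state)
    return controller

if __name__ == "__main__":
    # Stand-in networks that just report which one was called.
    ctrl = make_switching_controller(lambda s: "swing-up", lambda s: "balance")
    print(ctrl({"theta1": math.pi, "theta2": 0.0}))   # swing-up
    print(ctrl({"theta1": 0.1, "theta2": -0.05}))     # balance
```

A hard switch like this is exactly what a single network handling both phases would make unnecessary.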

The next step is to implement more sophisticated genetic algorithms (GAs) that are more powerful than CNE (conventional neuroevolution). The problem with CNE is that progress stalls after a while, since the entire population loses diversity by reproducing only with similar sibling networks (……..). After many generations of crossover and mutation, most solutions look alike, and the search still ends up stuck in a local optimum.
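One way to watch this loss of diversity happen is to track a diversity metric every generation. A simple choice, assumed here rather than taken from the trainer, is the mean pairwise Euclidean distance between genomes (each genome again assumed to be a flat list of weights): as the population converges on near-identical siblings, the metric trends toward zero.

```python
import itertools
import math
import random

def mean_pairwise_distance(population):
    """Average Euclidean distance over all pairs of genomes (0.0 if fewer than two)."""
    pairs = list(itertools.combinations(population, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)

if __name__ == "__main__":
    rng = random.Random(0)
    # A freshly initialized population versus one made of near-identical siblings.
    diverse = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(20)]
    converged = [[0.5 + rng.gauss(0.0, 0.01) for _ in range(8)] for _ in range(20)]
    print(mean_pairwise_distance(diverse), mean_pairwise_distance(converged))
```

Logging this value alongside the best fitness makes it easy to see whether a plateau coincides with the population collapsing onto one solution.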

Other methods, such as ESP or NEAT, may produce more robust and stable networks. I'm going to tackle ESP next, since it is basically a parallel set of feed-forward networks within a larger recurrent network, which is doable within the convnet.js framework. For NEAT-type algorithms, where the network structure itself evolves, I may need to look at other neural net libraries or start hacking on the recurrent.js experimental code. That could open another big can of worms!
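ESP (Enforced SubPopulations) evolves each hidden neuron in its own subpopulation: networks are assembled by drawing one neuron from every subpopulation, each neuron is credited with the average fitness of the networks it took part in, and recombination happens only within a subpopulation. The sketch below illustrates that idea under assumptions: each neuron is a plain weight list, `network_fitness` is a hypothetical callback that would wire the chosen neurons into a network and run the pendulum simulation, neurons are drawn uniformly at random rather than on a fixed trial schedule, and the `keep` fraction and mutation `sigma` are arbitrary.

```python
import random

def evaluate_esp(subpops, network_fitness, trials, rng):
    """Score every neuron by the mean fitness of the networks it joined.

    subpops[i] holds candidate neurons (weight lists) for hidden unit i.
    Neurons never drawn in any trial get a score of -inf.
    """
    totals = [[0.0] * len(sp) for sp in subpops]
    counts = [[0] * len(sp) for sp in subpops]
    for _ in range(trials):
        picks = [rng.randrange(len(sp)) for sp in subpops]  # one neuron per subpopulation
        f = network_fitness([subpops[i][j] for i, j in enumerate(picks)])
        for i, j in enumerate(picks):
            totals[i][j] += f
            counts[i][j] += 1
    return [[t / c if c else float("-inf") for t, c in zip(ts, cs)]
            for ts, cs in zip(totals, counts)]

def next_generation(subpops, scores, rng, keep=0.25, sigma=0.3):
    """Breed each subpopulation separately from its own best neurons."""
    new = []
    for sp, sc in zip(subpops, scores):
        order = sorted(range(len(sp)), key=lambda j: sc[j], reverse=True)
        parents = [sp[j] for j in order[:max(1, int(len(sp) * keep))]]
        children = []
        while len(parents) + len(children) < len(sp):
            a, b = rng.choice(parents), rng.choice(parents)
            cut = rng.randrange(len(a) + 1)  # one-point crossover within this subpopulation
            children.append([w + rng.gauss(0.0, sigma) for w in a[:cut] + b[cut:]])
        new.append(parents + children)
    return new
```

Keeping neurons in separate subpopulations is what lets each one specialize into a distinct role in the assembled network, instead of the whole population drifting toward copies of a single solution.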