As described in a previous post, the simple conventional neuroevolution method doesn’t seem to work well enough to both swing the pendulum upwards and keep it balanced there. I think the problem is that training runs through many generations to reach a locally optimal state that allows the pendulum to swing upwards, but then gets stuck at that local optimum and cannot reach the global optimum of being able to do both tasks (swing and balance).

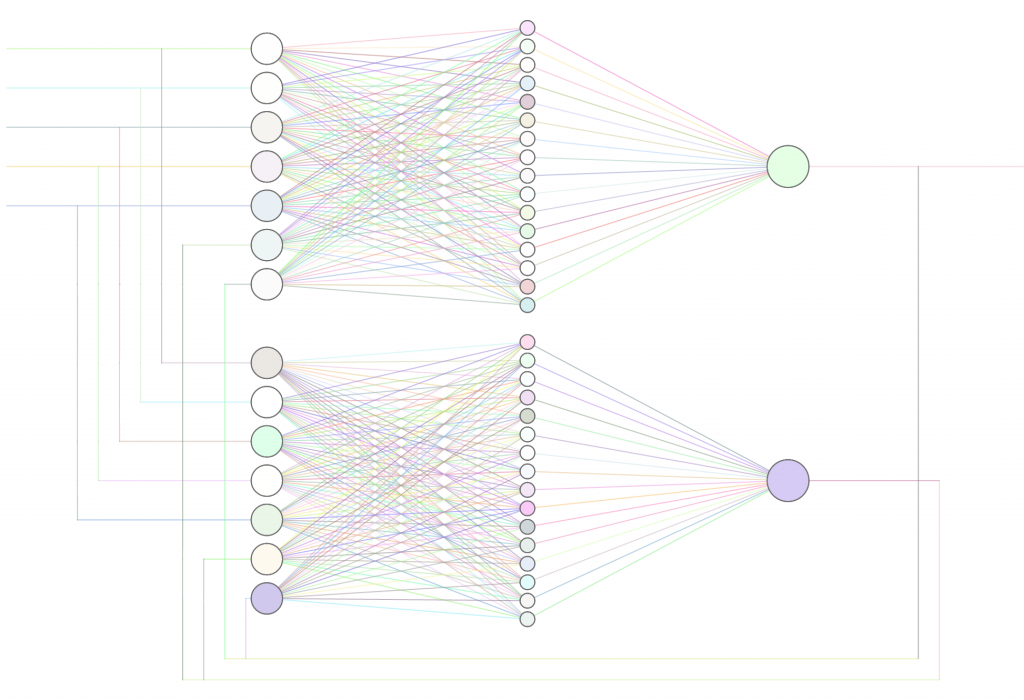

After reading the paper on the Enforced Subpopulations (ESP) genetic algorithm method for training neural nets, I decided to extend our GA add-in library so that it can train a recurrent neural network architecture similar (but not identical) to the one specified in the paper. Rather than sticking with the plain convnet.js neural net class, I extended it by defining a type of recurrent network called ESPnets that I can train to accomplish a task. The network I ended up using to balance the pendulum looks like this (implemented using the convnet.js neural network library):

Basically, the 5 inputs (upper angle, upper angular velocity, lower angle, lower angular velocity, position of cart) are fed into two feed-forward sub-networks. The output of the first network is used to control the speed of the cart’s motor. The output of the second network is a hidden ‘internal state’. Both outputs are then fed back to both networks as 2 extra inputs, making this a recurrent neural network.
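To make the wiring concrete, here is a minimal sketch of that forward pass in plain JavaScript. It is not the ESPnet code from the library and does not use convnet.js: the hidden layer width, the tanh activations, the weight layout, and all function names are assumptions made for illustration.

```javascript
// Hypothetical sketch of the two-sub-network recurrent wiring.
// Sizes, activations, and names are assumptions, not the library's API.
const N_SENSORS = 5;  // upper angle, upper angular velocity, lower angle,
                      // lower angular velocity, cart position
const N_FEEDBACK = 2; // previous motor output and previous internal state
const N_HIDDEN = 4;   // assumed hidden layer width

// Build one small feed-forward sub-network with random weights in [-1, 1).
function makeSubnet(rand) {
  const nIn = N_SENSORS + N_FEEDBACK;
  const w1 = Array.from({ length: N_HIDDEN }, () =>
    Array.from({ length: nIn + 1 }, () => rand() * 2 - 1)); // last entry is the bias
  const w2 = Array.from({ length: N_HIDDEN + 1 }, () => rand() * 2 - 1);
  return { w1, w2 };
}

// One hidden tanh layer followed by a single tanh output in (-1, 1).
function forwardSubnet(net, input) {
  const hidden = net.w1.map(row => {
    let sum = row[row.length - 1];
    for (let i = 0; i < input.length; i++) sum += row[i] * input[i];
    return Math.tanh(sum);
  });
  let out = net.w2[net.w2.length - 1];
  for (let i = 0; i < hidden.length; i++) out += net.w2[i] * hidden[i];
  return Math.tanh(out);
}

function makeRecurrentPair(rand = Math.random) {
  return {
    motorNet: makeSubnet(rand),
    stateNet: makeSubnet(rand),
    feedback: [0, 0], // both fed-back values start at zero
  };
}

// Advance one time step: both sub-networks see the sensors plus both
// previous outputs, and their new outputs become the next feedback.
function step(pair, sensors) {
  const input = sensors.concat(pair.feedback);
  const motor = forwardSubnet(pair.motorNet, input);
  const state = forwardSubnet(pair.stateNet, input);
  pair.feedback = [motor, state];
  return motor; // only the first output drives the cart
}
```

Calling `step(pair, sensors)` once per simulation frame returns the motor command, while the hidden state is carried forward inside `pair.feedback`.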

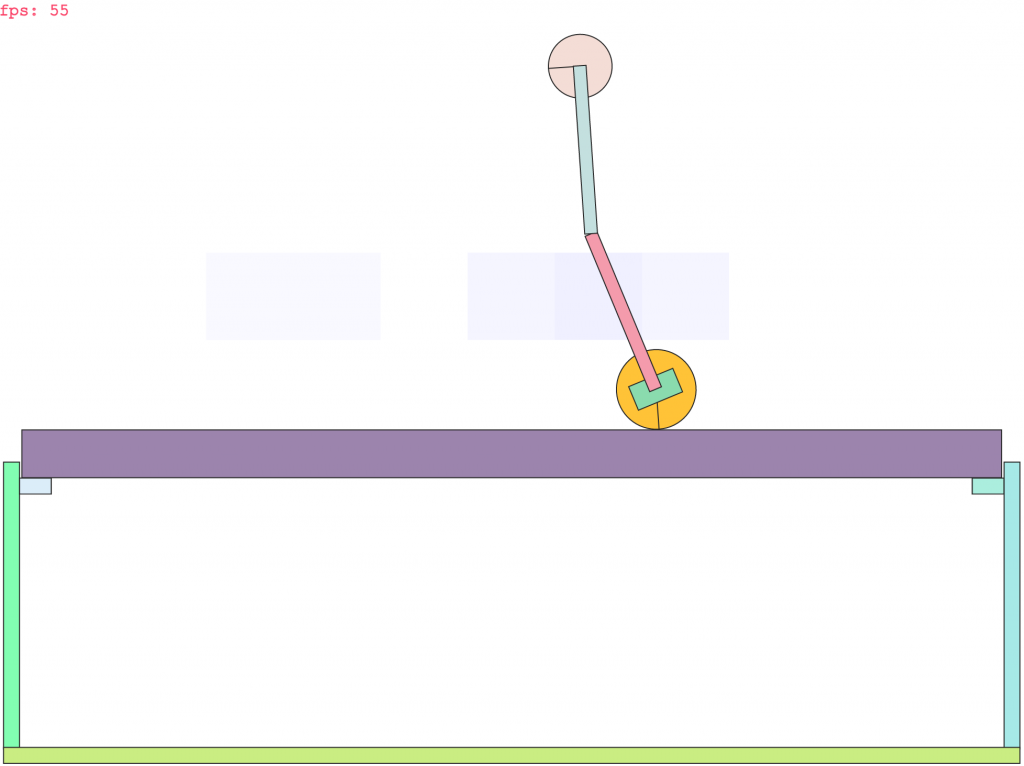

When training this network, I trained it to both balance the pendulum and swing it up at the same time (multiplying the fitness of the two tasks to get the final fitness). This approach did not work with the conventional neural network (the GATrainer class in the older example), but it finally works for this type of segmented network! See the demo below:
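As a small illustration of why multiplying the two fitness values matters, here is a sketch under the assumption that each task is scored between 0 and 1 (the post does not say how the individual scores are scaled, and the function name is made up):

```javascript
// Hypothetical combined fitness: the product of two per-task scores,
// each assumed to lie in [0, 1].
function combinedFitness(swingScore, balanceScore) {
  return swingScore * balanceScore;
}

// A specialist that swings up perfectly but never balances scores nothing,
// while a mediocre all-rounder still gets credit.
const specialist = combinedFitness(1, 0);      // 0
const allRounder = combinedFitness(0.5, 0.5);  // 0.25
```

With a sum instead of a product, both of those candidates would score 1, so the product is what pushes evolution away from networks that only solve one of the two tasks.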

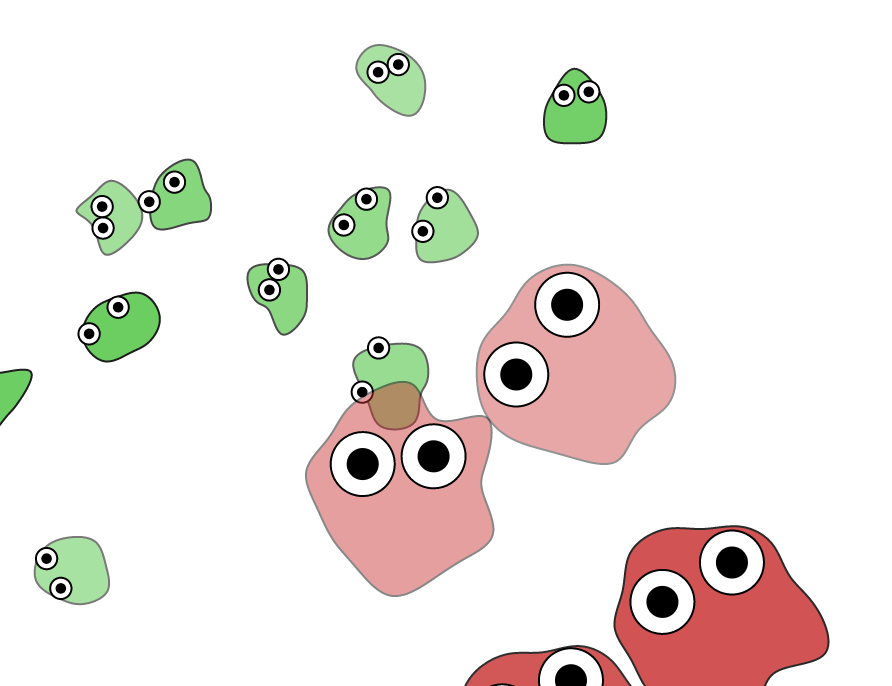

The reason it is believed to have worked is that, during training, the top network and the bottom network end up being trained to solve different problems, and the magic is that they are trained at the same time to work with each other. The theory behind the ESP algorithm is that one can train a set of different sub-networks, each drawn from its own subpopulation and each able to tackle a sub-problem, so that when put together the network is a lot more powerful at solving general problems. Even though some sub-networks may be weaker overall than others, each one competes for survival only against its own kind in the simulated genetic reproduction, rather than against the other sub-networks for overall fitness, hence diversity in the solution space is maintained.
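The "battling their own kind" idea can be sketched as follows. This is a simplified illustration, not the implementation used here and not the exact algorithm from the ESP paper (which keeps one subpopulation per neuron). The genome format (flat weight arrays), the truncation selection, the one-point crossover, the uniform mutation, and every name below are assumptions.

```javascript
// Score every member by the average fitness of the teams it took part in.
// `evaluate(team)` is a user-supplied function taking one genome per
// subpopulation and returning a number (higher is better).
function scoreSubpops(subpops, evaluate, trials, rand) {
  const stats = subpops.map(pop => pop.map(() => ({ total: 0, count: 0 })));
  subpops.forEach((pop, s) => {
    pop.forEach((_, i) => {
      for (let t = 0; t < trials; t++) {
        // Member i fills slot s; partners are drawn at random from the others.
        const picks = subpops.map((other, k) =>
          k === s ? i : Math.floor(rand() * other.length));
        const fitness = evaluate(picks.map((idx, k) => subpops[k][idx]));
        picks.forEach((idx, k) => {
          stats[k][idx].total += fitness;
          stats[k][idx].count += 1;
        });
      }
    });
  });
  return stats.map(popStats => popStats.map(st => st.total / st.count));
}

// Selection and reproduction happen strictly inside one subpopulation.
function breedSubpop(pop, scores, mutationScale, rand) {
  const ranked = pop
    .map((genome, i) => ({ genome, score: scores[i] }))
    .sort((a, b) => b.score - a.score);
  const nKeep = Math.max(1, Math.floor(pop.length / 2));
  const parents = ranked.slice(0, nKeep).map(r => r.genome);
  const next = parents.map(g => g.slice()); // survivors carry over unchanged
  while (next.length < pop.length) {
    const a = parents[Math.floor(rand() * parents.length)];
    const b = parents[Math.floor(rand() * parents.length)];
    const cut = Math.floor(rand() * a.length); // one-point crossover
    const child = a.slice(0, cut).concat(b.slice(cut))
      .map(w => w + (rand() * 2 - 1) * mutationScale);
    next.push(child);
  }
  return next;
}

function evolveGeneration(subpops, evaluate, options = {}) {
  const { trials = 5, mutationScale = 0.1, rand = Math.random } = options;
  const scores = scoreSubpops(subpops, evaluate, trials, rand);
  return subpops.map((pop, s) => breedSubpop(pop, scores[s], mutationScale, rand));
}
```

In the pendulum setting, one subpopulation would hold candidate weights for the motor network and another for the internal-state network, with `evaluate` running the simulation on the assembled pair. Because ranking happens per subpopulation, a state network is never discarded for being "worse" than a motor network; it only has to beat other state networks.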

I see this approach in other more interesting algorithms such as NEAT as well. The key seems to be the ability to keep a wide variety of ‘species’ that are good in their own way, rather than keeping only the best species and discarding the rest (which is basically what conventional neuroevolution (CNE) does, and why it gets stuck at a locally optimal solution), so we can hope to get closer to the global optimum. The ESP solution still doesn’t work too well: for example, once we knock the pendulum down with the mouse, chances are it cannot get back up, although I believe adding a third subpopulation would help. I really think other algorithms such as NEAT are more elegant and better in the end for this task though.

I could refine my implementation of ESP to do everything properly, but I think I’m going to move on to learn more about NEAT, HyperNEAT, and CPPNs next, as they seem to be a lot more interesting neuroevolution algorithms to me.

The concept of global/local optimality, and this diversification theory, is quite deep to me. It makes me think about life’s philosophy, and one’s approach to life. It may even apply to leadership and management: among groups of people or teams, a diverse one can tackle a problem and eventually arrive at a much better solution, while a non-diverse group of similar experts may only be able to give a good solution. It always helps to keep different people with different strengths and weaknesses as a team, rather than have a team of all A-players in a single field, especially when trying to come up with innovative solutions to problems. The biggest mistake I see in the workplace these days is that people tend to hire people like themselves, producing teams of clones that do not innovate because diversity is lacking.