The frog of CIFAR10?

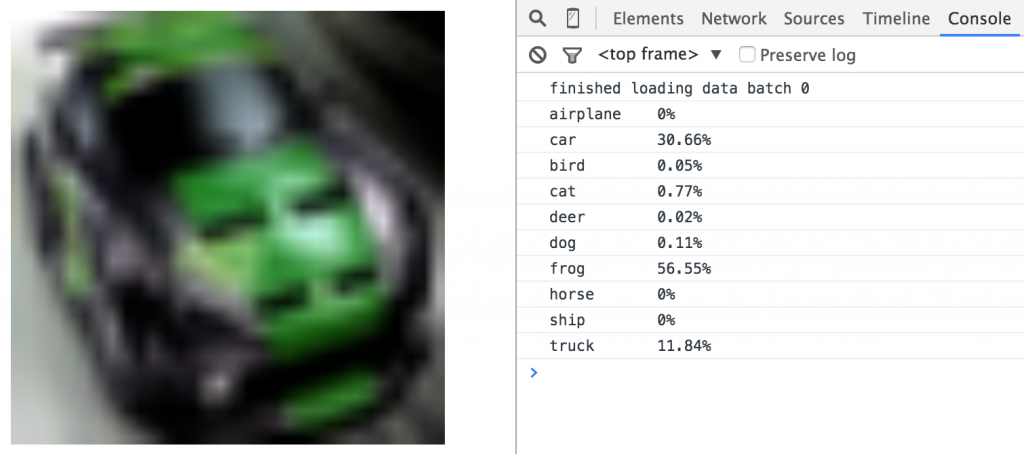

karpathy’s JavaScript convnet image recognition demo is quite neat and achieves ~80% accuracy on the CIFAR10 dataset. It’s certainly not state-of-the-art, but it’s not that far from it, and we can easily hack and poke around in the JS to experiment with it.

Here, I stripped out the trained network and had it show random pictures from the test set and try to predict the correct class out of ten categories. The human brain would interpret this as nothing less than a Porsche GT3, while convnet.js thinks it is a frog with 56% probability (but a car or truck with a combined ~43% probability, so not that bad).

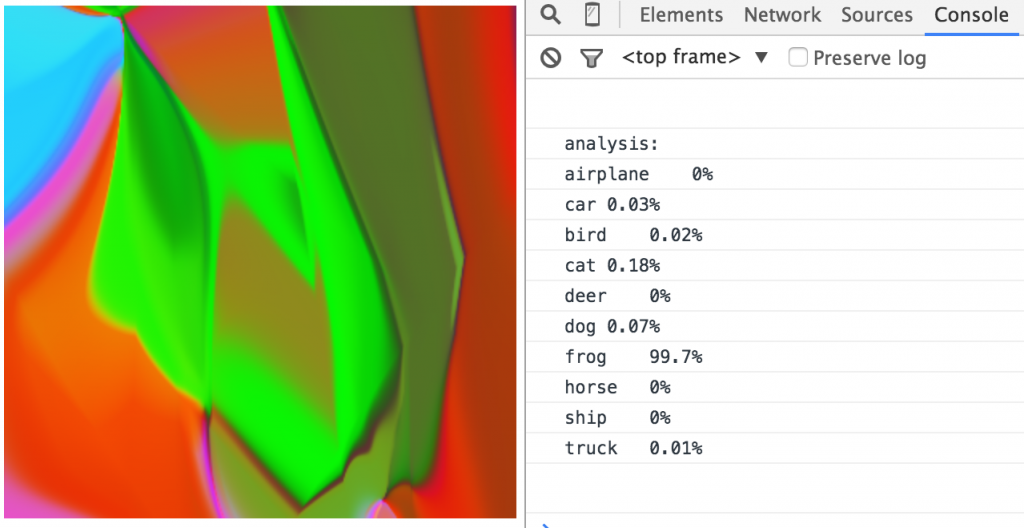

What happens if I try to apply some simple neuroevolution algorithms on the neural network art generator to optimise for, say, frog classification?

Doesn’t look too much like a frog yet, but still cool to look at.
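The neuroevolution idea above can be sketched roughly as follows. This is a toy illustration, not the code behind the image: `generate_image`, `make_classifier` and `evolve` are hypothetical names, the "classifier" is a fixed random linear layer standing in for the trained convnet, and the "art generator" is a four-weight function of pixel coordinates. The only thing taken from CIFAR-10 is that frog is class index 6 in the usual ordering.

```python
import math
import random

FROG = 6   # index of "frog" in the usual CIFAR-10 class ordering
SIZE = 8   # tiny grayscale image side, to keep the sketch fast


def generate_image(genome):
    """Toy 'art generator': map each (x, y) coordinate to a pixel in [0, 1]."""
    w_x, w_y, w_r, bias = genome
    pixels = []
    for i in range(SIZE):
        for j in range(SIZE):
            x = i / (SIZE - 1) - 0.5
            y = j / (SIZE - 1) - 0.5
            r = math.sqrt(x * x + y * y)
            pixels.append(0.5 + 0.5 * math.tanh(w_x * x + w_y * y + w_r * r + bias))
    return pixels


def make_classifier(rng, n_classes=10):
    """Stand-in for a trained convnet (assumption): random linear layer + softmax."""
    weights = [[rng.gauss(0, 1) for _ in range(SIZE * SIZE)] for _ in range(n_classes)]

    def classify(pixels):
        logits = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
        top = max(logits)
        exps = [math.exp(v - top) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]

    return classify


def evolve(classify, rng, generations=200, children=20, sigma=0.3):
    """(1 + lambda) evolution: mutate the parent, keep the best child if it improves."""
    parent = [rng.gauss(0, 1) for _ in range(4)]
    best = classify(generate_image(parent))[FROG]
    history = [best]
    for _ in range(generations):
        candidates = [[g + rng.gauss(0, sigma) for g in parent] for _ in range(children)]
        scored = [(classify(generate_image(c))[FROG], c) for c in candidates]
        top_score, top = max(scored, key=lambda pair: pair[0])
        if top_score > best:
            parent, best = top, top_score
        history.append(best)
    return parent, history
```

Calling `evolve(make_classifier(random.Random(0)), random.Random(1))` returns the best genome and the frog probability after each generation, which never decreases because a child only replaces the parent when it scores higher. Nothing in the loop cares whether the image looks like a frog to a person, only that the classifier's frog score goes up.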

The problem with this approach is that it is too easy to fool a convnet into thinking it is seeing a particular class of image. There are some recursive methods, employed recently by Google Research and FAIR, that use competing networks to generate natural-looking images, and I want to try them out. For example, network A is trained to tell natural real images from fake generated ones, while network B is trained to generate fake images that get classified as natural images by network A. Then have them keep on evolving to beat each other, kind of like how the agents in neural slime volleyball learned to play the game via competition.
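To make the network A versus network B setup a bit more concrete, here is a minimal one-dimensional sketch of that kind of adversarial training (usually called a generative adversarial network). Everything in it is an assumption for illustration: "real images" are just numbers drawn from a Gaussian centred at 4, network A is a single logistic unit, network B is a linear map of noise, and both are trained with hand-written gradient steps rather than evolution. The function names `sigmoid` and `train` are hypothetical.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    w, c = 0.1, 0.0   # network A (discriminator): D(x) = sigmoid(w * x + c)
    a, b = 1.0, 0.0   # network B (generator):     G(z) = a * z + b
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)   # toy stand-in for a natural image
        z = rng.gauss(0.0, 1.0)      # noise fed to the generator
        fake = a * z + b

        # Step for A: minimise -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Step for B: minimise -log D(fake), i.e. try to get fakes labelled real.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w   # d(loss) / d(fake)
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return (w, c), (a, b)
```

`train()` returns the final parameters of both networks. The point of the sketch is the alternating update: A is pushed to separate real from fake, then B is pushed in whatever direction makes A more likely to call its output real. Training like this can oscillate rather than settle, so the numbers it produces should not be read as a converged result.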

I think there’s tons of interesting work that can be done in this space. Will need to try to refine these models.